“... you can find the way to using HPC with ever-growing efficiency.” – interview with Gábor Dániel Balogh, PhD student with HPC Grant.

How would you introduce yourself, and what is your field of research?

I am an engineering IT specialist, currently at the “Pázmány Péter” Catholic University, and a student of the “Roska Tamás” PhD School of Technical and Natural Sciences. For the past 6 years, I have focused on parallelisation. My research team studies how to implement parallel algorithms efficiently on CPUs and GPUs, as well as on clusters and supercomputers. Primarily, we work on implementing general algorithms that support the work of other researchers. We parallelise simulations automatically, so that researchers, be they physicists, biologists, mechanical engineers or anyone else, do not have to invest a lot of their time in understanding how their existing application could be adapted. In other words, we develop a domain abstraction.

When and how did you encounter supercomputing?

During the first semester of the MSc program, Dr. István Reguly taught a course on parallel programming at the university, and that’s when I first heard of supercomputers. The course covered a broad spectrum, from CPU parallelisation to GPU parallelisation. I was drawn to it because it poses real challenges: you need to think slightly differently, and it also seemed a bit mysterious. So I approached him and said that I’d be happy to work on this in more depth, which later became reality in the form of an independent laboratory project. Since then, I have been working on parallel programming in various fields under his supervision.

Didn’t your previous studies include HPC?

I studied molecular bionics during the BSc program, and parallel algorithms were mentioned only in passing in relation to bioinformatics, but we never focused on supercomputers. The MSc program for engineering IT specialists already offers some courses on parallel programming that point in the direction of HPC.

What is the role of HPC in your field?

In my field, we essentially focus on how to help others use HPC. For example, two years ago, a classmate of mine at the university wanted to run spin simulations, and we helped accelerate the simulation code. Our frameworks are OP2 and OPS; the latter can be used to describe calculations on any structured mesh. That is, if you have a simulation that operates on a regular (square) grid, you can port it to OPS. Our focus is on using it efficiently on both supercomputers and smaller clusters.
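To make the idea of a structured-mesh domain abstraction more concrete, here is a minimal Python sketch of the separation of concerns it relies on: the domain scientist writes only a per-point kernel that reads neighbours through relative offsets, while the library owns the loop over the grid. This is not the OPS API (OPS is a C/C++ library with its own interface); `par_loop`, `jacobi_kernel` and the accessor `at` are hypothetical names invented for illustration, and the loop here simply runs serially.

```python
from typing import Callable, List

Grid = List[List[float]]
Accessor = Callable[[int, int], float]   # (dx, dy) offset -> neighbour value
Kernel = Callable[[Accessor], float]     # per-point computation


def par_loop(kernel: Kernel, src: Grid, dst: Grid, halo: int = 1) -> None:
    """Hypothetical structured-mesh loop: apply `kernel` at every interior
    point of `src`, writing results to `dst`. The user never writes this
    loop; a real framework could run it with threads, GPUs or MPI instead
    of the plain serial loop used here."""
    ny, nx = len(src), len(src[0])
    for j in range(halo, ny - halo):
        for i in range(halo, nx - halo):
            # The kernel only sees values relative to the current point (i, j)
            def at(dx: int, dy: int, i: int = i, j: int = j) -> float:
                return src[j + dy][i + dx]
            dst[j][i] = kernel(at)


def jacobi_kernel(at: Accessor) -> float:
    # 5-point average: the only part the domain scientist has to write
    return 0.25 * (at(-1, 0) + at(1, 0) + at(0, -1) + at(0, 1))


# 5x5 grid with boundary values 1.0 and interior values 0.0
src = [[1.0 if i in (0, 4) or j in (0, 4) else 0.0 for i in range(5)]
       for j in range(5)]
dst = [row[:] for row in src]            # copy keeps the fixed boundary values
par_loop(jacobi_kernel, src, dst)
print(dst[1][1], dst[1][2], dst[2][2])   # 0.5 0.25 0.0
```

Because the kernel never mentions loop bounds or memory layout, the same kernel could in principle be handed to a serial, multithreaded or distributed back end without changes, which is the point of the abstraction.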

Is there an alternative to supercomputers in your professional field?

It depends on how big the applications are and whether they need a supercomputer at all. There are applications that make full use of an entire supercomputer; others are parallelised to run on only a portion of one, or even on a single GPU or CPU node. But the entire research is conducted within the realm of HPC, that is, a supercomputer or at least a smaller cluster is needed.

Where did you hear about the call for grant applications of the Competence Centre?

If I remember correctly, it was my supervisor who forwarded it to me in January, so I had ample time to prepare the application.

Why did you apply for this?

My primary motivation would have been to try out Komondor, which could enable us to run large-scale simulations as well. It is an exciting opportunity to get to know a new architecture and thereby to contribute to the support provided for Hungarian users.

With what project did you apply?

In the past, we had a research project on solving tridiagonal systems. Within the framework of a research grant, this work was extended to pentadiagonal systems. The goal was to create an algorithm that performs well at high node counts, that is, one that can actually be used on supercomputers.
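For readers unfamiliar with these systems, the sketch below shows the classic serial baseline: Gaussian elimination without pivoting restricted to the band of a matrix with half-bandwidth `p` (`p = 1` is tridiagonal and reduces to the well-known Thomas algorithm; `p = 2` is pentadiagonal). It is not the distributed algorithm developed in the project. It assumes a diagonally dominant matrix (so no pivoting is needed) and stores the matrix densely for readability, whereas practical solvers keep only the 2p+1 diagonals. `solve_banded` and `pentadiagonal` are hypothetical helper names.

```python
from typing import List


def solve_banded(A: List[List[float]], rhs: List[float], p: int) -> List[float]:
    """Solve A x = rhs where A has half-bandwidth p, using Gaussian
    elimination without pivoting. Assumes A is diagonally dominant.
    The elimination touches only O(n * p^2) entries instead of O(n^3)."""
    n = len(A)
    M = [row[:] for row in A]   # work on copies, leave inputs untouched
    b = rhs[:]
    # Forward elimination: only the p rows below pivot k have nonzeros in column k
    for k in range(n):
        for i in range(k + 1, min(k + p + 1, n)):
            factor = M[i][k] / M[k][k]
            for j in range(k, min(k + p + 1, n)):
                M[i][j] -= factor * M[k][j]
            b[i] -= factor * b[k]
    # Back substitution: row i couples only to the next p unknowns
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        s = b[i]
        for j in range(i + 1, min(i + p + 1, n)):
            s -= M[i][j] * x[j]
        x[i] = s / M[i][i]
    return x


def pentadiagonal(n: int) -> List[List[float]]:
    """Example strictly diagonally dominant pentadiagonal matrix:
    6 on the main diagonal, -1 on the two sub- and super-diagonals."""
    A = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(max(0, i - 2), min(n, i + 3)):
            A[i][j] = 6.0 if i == j else -1.0
    return A


n = 8
A = pentadiagonal(n)
x_true = [float(i + 1) for i in range(n)]
rhs = [sum(A[i][j] * x_true[j] for j in range(n)) for i in range(n)]
x = solve_banded(A, rhs, p=2)
print([round(v, 6) for v in x])
```

Note the sequential dependency in the forward sweep: row `k + 1` cannot be processed before row `k`. This chain is exactly what makes such solvers hard to scale across many nodes and why dedicated parallel algorithms are needed.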

Will you manage to reach the specified goal?

The research project is ongoing and we have made considerable progress, but there are still issues we have not been able to fully resolve. However, with the help of KIFU, we were granted machine time on the MARCONI supercomputer, and the Italians welcomed us with open arms. There are architectural differences, and MARCONI has previous-generation GPUs, so we are also curious to see the differences in performance.

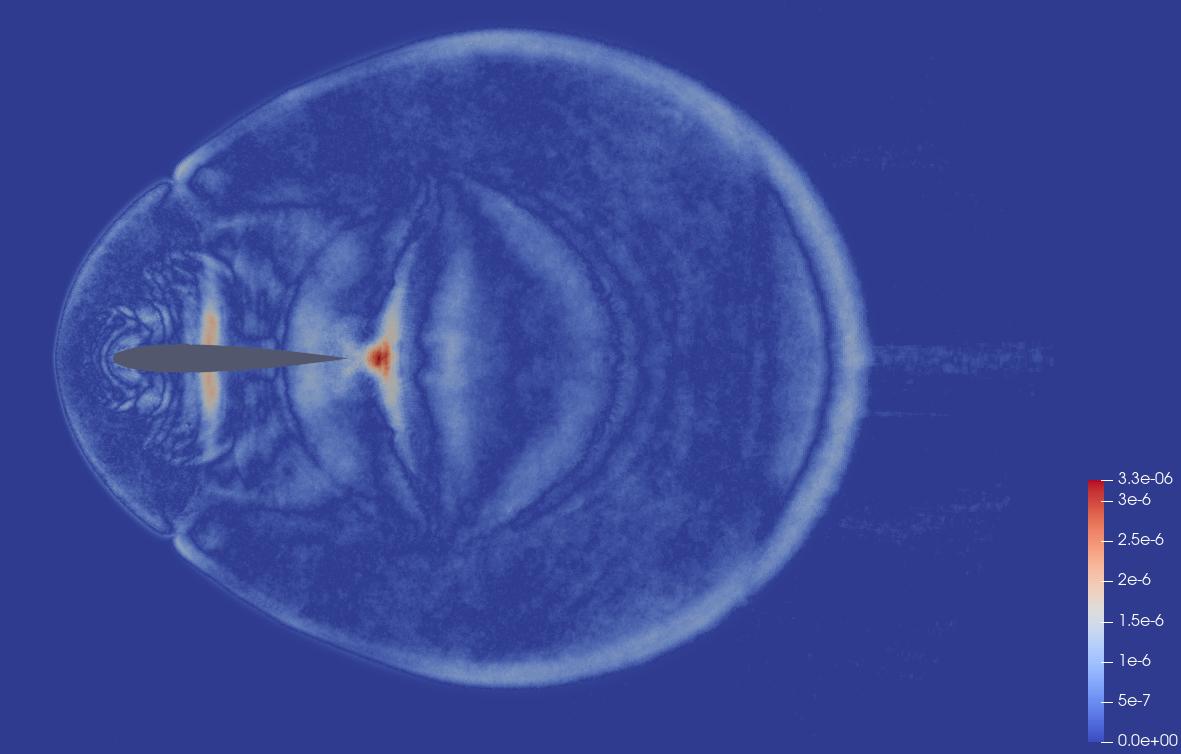

Difference between simulations run in single and double precision, for a flow simulation around an airfoil (wing cross-section)

To what extent do you think the use of supercomputers is challenging?

We always face challenges with supercomputers. For smaller applications, the challenge is to run the algorithms within a given node as efficiently as possible. For larger applications, the interesting challenges include communication within the machine and the organisation of the nodes. And our task is to generalise, so that we achieve good performance when solving the next similar problem too.

What makes an efficient supercomputer user?

Primarily, it is motivation. You need to learn a lot, but you can find the way to using HPC with ever-growing efficiency. From a distance, you can start by using a library that helps you understand parallelisation; looking closer, you can learn optimisation techniques, and this knowledge can be extended continuously.

Where does the professional level begin?

The more problems you know, the more new problems you can foresee. I have gathered experience with a lot of problems in the field of HPC, but there are still a huge number of things I would like to try out so that I can really understand how they work.

For whom would you recommend the use of supercomputers?

They can be useful in a great number of fields. Imaging and image analysis are obvious examples in medicine, and applications for personalised medicine are also being developed. You can also gain a lot in more traditional areas, such as engineering design simulations, say in fluid mechanics. The scope of uses is extremely wide; what is primarily missing is getting potential users familiar with supercomputers as early as possible.

Do you think of higher education here?

Yes, for instance. People acquire a general knowledge of a machine learning environment relatively quickly: they train their first one or two networks and then try them out on GPUs too. In other fields, the step of running something big enough to require huge computing capacity comes much later.

How long does it take to learn supercomputing at the basic level?

Not much, at the basic level. We have a similar system in place on the university cluster, and you can learn to use it quickly. Parallel programming and application development take more time. Making a simulation run efficiently on an entire supercomputer is already a big challenge, since there are many methods and the outcome depends on many factors. When computing on an entire supercomputer, communication is important, because the machine is essentially a set of interconnected computers sending messages to each other. The more computers there are, the higher the cost of messaging. To manage this, one thing we focus on is localising communication.
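One textbook way to see why the shape of the work split matters for communication is the surface-to-volume argument for a grid decomposed across processes: each process computes on its block but only has to exchange the cells on the block's edges with its direct neighbours. The sketch below is an illustration of that general principle, not necessarily the specific technique the team uses. It assumes a 2D `n x n` grid, a one-cell-wide halo, an interior process, no diagonal neighbours and an even split; `halo_cells_per_rank` is a hypothetical helper.

```python
def halo_cells_per_rank(n: int, px: int, py: int) -> int:
    """Grid cells an interior rank sends in one halo exchange when an
    n x n grid is split into px x py equal blocks (one-cell-wide halo,
    no diagonal neighbours). Hypothetical helper for illustration."""
    assert n % px == 0 and n % py == 0, "assumes an even split"
    bx, by = n // px, n // py   # block width and height
    sent = 0
    if px > 1:
        sent += 2 * by          # left and right edges
    if py > 1:
        sent += 2 * bx          # top and bottom edges
    return sent


n = 4096
for px, py in [(64, 1), (8, 8)]:      # 64 ranks either way
    cells = (n // px) * (n // py)     # work per rank is identical
    print(f"{px}x{py}: {cells} cells computed, "
          f"{halo_cells_per_rank(n, px, py)} cells sent")
```

With 64 ranks, both layouts give every rank 262144 cells of work, but thin strips send 8192 cells per exchange while square blocks send only 2048. Keeping blocks compact and talking only to neighbours is one simple form of keeping communication local.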

So you also need a support team for your supercomputer, right?

Ideally, yes; it helps a lot, for example, for an astronomer. In the past, it worked like this: everyone wanted to run simulations of ever-increasing complexity, and so they also had to learn the necessary techniques themselves. With a supportive team, this extra learning can be shortened significantly; the small steps may even turn into leaps.

Can you imagine the interaction between the various fields of science?

It is already working very well. Certain patterns emerge frequently during calculations. One example is the pentadiagonal equation solver, the subject of my present grant application. This type of system of equations also arises in financial calculations as well as in fluid mechanics. If the building blocks of an algorithm are modular, they can be adapted to other fields of science.
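As one illustration of how such systems arise (not necessarily the application behind the grant), consider the diffusion equation, which appears in fluid mechanics and, after a change of variables, also underlies the Black-Scholes equation of option pricing. Discretising the second derivative with the standard fourth-order central difference on a grid with spacing $h$ and stepping implicitly in time with step $\Delta t$ couples each unknown to its two neighbours on either side, so every row of the resulting linear system has five nonzeros:

```latex
\frac{\partial u}{\partial t} = \nu \frac{\partial^2 u}{\partial x^2},
\qquad
\left.\frac{\partial^2 u}{\partial x^2}\right|_i \approx
\frac{-u_{i-2} + 16u_{i-1} - 30u_i + 16u_{i+1} - u_{i+2}}{12h^2}

% Implicit Euler step, with r = \nu \Delta t / (12 h^2):
r\,u_{i-2}^{n+1} - 16r\,u_{i-1}^{n+1} + (1 + 30r)\,u_i^{n+1}
  - 16r\,u_{i+1}^{n+1} + r\,u_{i+2}^{n+1} = u_i^{n}
```

Solving for all $u_i^{n+1}$ therefore means solving a pentadiagonal system at every time step, which is why a fast, reusable solver for this pattern pays off across several fields.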

How do you see the future of supercomputers?

Now that supercomputing has finally entered the exascale range, the most exciting question is how to efficiently leverage such huge computing performance. Growth is also an interesting topic: whether we will be able to keep up the current pace of development. I very much welcome the trend that the objective here in Europe is the same: to ensure that the resources are used by as wide a range of users as possible.

What do you think of quantum computers?